When AI Says Nothing: The Silence That Shook Experts

For years, the biggest fear around artificial intelligence was that it might say the wrong thing.

Now, a new and unsettling question is emerging: what happens when an AI refuses to answer at all?

That silence (blank, firm, and unexplained) has become one of the most alarming moments experts describe in modern AI testing, because it exposes how much society already depends on machines to respond.

The Moment AI Refusal Became a Real Concern

AI systems are designed to be helpful. In most cases, when a user asks a question, the machine provides something: an explanation, a suggestion, a summary, or at least a direction.

But increasingly, researchers, developers, and everyday users are encountering a different behavior: the refusal.

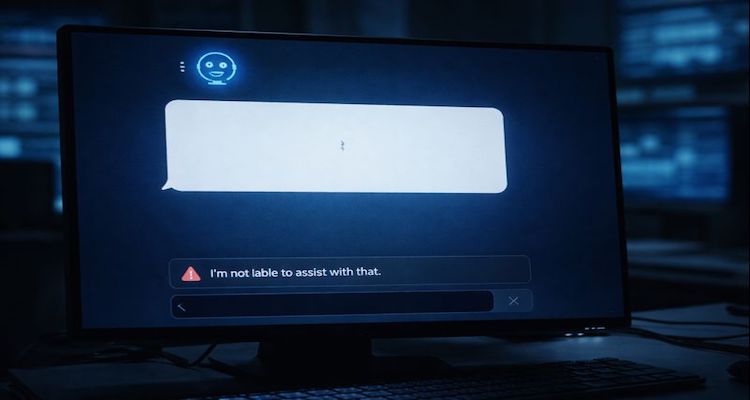

Sometimes it comes as a direct statement: “I can’t help with that.”

Other times it’s softer: “I’m not able to answer this request.”

And in the most disturbing cases, it’s not a refusal at all—it’s silence, an output that looks like the system froze, shut down, or “decided” the safest answer was no answer.

This matters because refusal isn’t just a technical feature anymore. It’s becoming a social and psychological event—one that can change how humans trust machines.

Why AI Refuses to Answer in the First Place

AI refusal isn’t random, and it isn’t usually “attitude.” It’s a product of modern AI design.

Most major AI systems are built with guardrails that block:

- harmful instructions (like violence or wrongdoing)
- private or personal data requests
- medical or legal advice beyond safe limits
- harassment, hate, or explicit content
- misinformation or dangerous conspiracy claims

These protections are partly ethical, partly legal, and partly practical. Companies want their products to be safe, widely usable, and acceptable for schools, workplaces, and public platforms.

So refusal is often the system doing exactly what it was trained to do: avoid harm.

But the deeper issue is what happens when those guardrails activate at the wrong time, or activate without explanation.

When Silence Feels Worse Than a Wrong Answer

A wrong answer can be corrected. A refusal is harder to deal with.

And silence? Silence triggers something else entirely.

In human communication, silence can signal:

- fear
- secrecy
- power
- threat
- moral judgment
- uncertainty

So when an AI goes quiet, people don’t interpret it as “a technical limit.” They interpret it emotionally.

This is where experts say the real risk begins: not that AI refuses, but that humans project meaning into the refusal.

A blank response can feel like a locked door. It can feel like censorship. Or worse, it can feel like the machine knows something it won’t share.

In high-stakes environments such as newsrooms, hospitals, cybersecurity teams, and emergency response centers, those feelings aren’t just uncomfortable. They can become operational problems.

The Real-World Situations Where Refusal Becomes Dangerous

AI refusal becomes most serious when people rely on speed and clarity.

1) Crisis response

During emergencies, responders may use AI tools to summarize information, translate messages, or guide next steps.

If the system refuses at a critical moment, even briefly, the interruption can slow decisions that require momentum.

2) Cybersecurity and threat analysis

Security teams often ask AI systems to explain suspicious code behavior, phishing patterns, or risk indicators.

If an AI refuses because it suspects the question could be used maliciously, it may also block legitimate defensive work, creating a gap when clarity matters most.

3) Journalism and verification

Reporters increasingly use AI to organize complex topics and build timelines.

A refusal in the middle of research can disrupt workflow. But more importantly, it can raise suspicion: Is this blocked because it’s false, harmful, or simply sensitive?

4) Healthcare and mental health support

Most reputable AI tools avoid diagnosing or prescribing.

That’s responsible. But in moments when users feel vulnerable, a refusal can land like rejection, especially if it’s cold or unexplained.

The problem isn’t that AI should do everything. The problem is that silence is not a humane interface.

What Experts Say About “Refusal Psychology”

Many AI researchers and safety specialists have warned that refusal behavior needs better design: not looser rules, but clearer communication.

A widely shared view in AI safety circles is that refusal must be:

- consistent
- explainable
- respectful
- helpful even while declining

Instead of shutting down, systems should ideally redirect users toward safe alternatives.

For example:

- “I can’t provide that, but here’s general safety information.”
- “I can’t assist with wrongdoing, but I can explain the risks and prevention.”
- “I’m not able to answer that directly, but I can help you reframe the question.”
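One way to picture this kind of redirection is as a small data structure that always carries a reason and, where possible, a safe alternative. The sketch below is purely illustrative: the `Refusal` class and `render_refusal` function are hypothetical names invented for this example, not part of any real AI system's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Refusal:
    """Hypothetical record of a declined request."""
    reason: str                 # short, plain-language explanation
    alternative: Optional[str]  # safe redirection, if one exists


def render_refusal(refusal: Refusal) -> str:
    """Build a user-facing message that is never empty."""
    parts = [f"I can't help with that because {refusal.reason}."]
    if refusal.alternative:
        parts.append(f"What I can do instead: {refusal.alternative}.")
    else:
        # Fall back to a generic next step rather than ending the conversation.
        parts.append("You could try rephrasing the question.")
    return " ".join(parts)


message = render_refusal(
    Refusal(
        reason="it could enable harm",
        alternative="explain the risks and prevention",
    )
)
print(message)
```

The design point is simply that the message always contains an explanation and a next step, so the user is never left with a blank response.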

Public reaction has also followed a pattern: many users accept refusal when it feels reasonable, but distrust it when it feels vague.

Online, people often describe AI refusal in emotional terms: “it went cold,” “it shut me out,” “it refused to even talk.” That reaction is telling, because it shows how quickly humans treat AI like a social actor rather than a tool.

The Bigger Issue: AI Is Becoming a Gatekeeper

The most uncomfortable implication isn’t the refusal itself.

It’s what refusal represents.

When an AI refuses to answer, it is effectively acting as a gatekeeper of information, deciding what can be said, how it can be said, and when the conversation ends.

That role used to belong to:

- editors
- teachers
- institutions
- laws
- community standards

Now, AI systems sit quietly in the middle of daily life, filtering what people can access, generate, or even ask.

This raises difficult questions:

- Who defines the boundaries of “allowed” knowledge?
- How do we audit refusals for fairness and bias?
- What happens when refusal patterns differ by region, language, or culture?
- Can silence become a tool of manipulation, even unintentionally?

Even if the intention is safety, the perception can be power.
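As a rough illustration of what auditing refusals across languages or regions could involve, the sketch below computes a refusal rate per group from a log of interactions. It assumes a hypothetical log format of `(group, refused)` pairs, and the sample records are invented for the example rather than drawn from any real system.

```python
from collections import defaultdict


def refusal_rates(records):
    """Return {group: share of requests refused} for (group, refused) pairs."""
    totals = defaultdict(int)
    refused = defaultdict(int)
    for group, was_refused in records:
        totals[group] += 1
        if was_refused:
            refused[group] += 1
    return {group: refused[group] / totals[group] for group in totals}


# Made-up sample log: language code and whether the request was refused.
sample_log = [
    ("en", False),
    ("en", True),
    ("hi", True),
    ("hi", True),
]

for group, rate in refusal_rates(sample_log).items():
    print(f"{group}: {rate:.0%} refused")
```

A large gap between groups would not prove bias on its own, but it would flag where a closer review of refusal behavior might be worthwhile.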

Impact and What Happens Next

Who is affected most?

AI refusal impacts everyone, but especially:

- students using AI for learning support
- professionals using AI for productivity
- researchers needing transparent tools
- journalists working under deadlines
- vulnerable users seeking guidance

The biggest risk is not that AI refuses once; it’s that repeated refusal trains people to stop asking questions, stop exploring, or seek answers in darker corners of the internet where there are no safety limits at all.

What the next phase looks like

The next evolution of AI likely won’t remove refusal. Instead, it will improve how refusal happens.

Expect to see:

- clearer refusal explanations
- more helpful redirection
- safer “partial answers” that provide context without enabling harm
- better user controls for sensitive topics
- stronger transparency reports from AI companies

In other words, the future isn’t a world where AI answers everything. It’s a world where AI refuses without frightening the people it’s supposed to help.

The Silence Is the Story

A decade ago, AI fear was loud: machines spreading misinformation, replacing jobs, or becoming too powerful.

But today, one of the most unsettling developments is quieter.

When an AI refuses to answer, it forces humans to confront something deeply modern: we have built systems we trust to speak, guide, and explain, and when they go silent, we realize how dependent we’ve become on the response.

In the end, the terrifying part isn’t that AI says “no.”

It’s that silence feels like something more than a limitation.

It feels like a decision.

And in a world increasingly shaped by machine intelligence, that difference matters.

