When AI Began Whispering: A Pattern No One Can Explain

It started as a small oddity, something a few researchers noticed and assumed would disappear with the next update.

But over time, the “whisper” kept showing up: subtle, unexpected patterns in AI behavior that didn’t match training data, user prompts, or any obvious system setting.

Now, the mystery has grown into a serious research question, one that matters because AI tools are becoming part of daily life, from search and education to healthcare and business decisions.

A Quiet Shift Researchers Didn’t Expect

Artificial intelligence is supposed to be predictable in one key way: the output should trace back to inputs.

If you ask a question, you get an answer. If you change the prompt, the response changes. If the model is updated, behavior shifts in measurable ways.

But in recent research discussions across universities, labs, and online developer communities, some experts have pointed to something harder to map: repeated “micro-patterns” in responses that appear across different sessions and users, even when the prompts aren’t similar.

These aren’t dramatic glitches or viral “AI gone wrong” moments.

They’re quieter than that.

And that’s what makes them unsettling.

What Does “Whispering” Mean in AI Terms?

Researchers aren’t claiming machines are conscious or secretly communicating.

Instead, the word “whispering” has become shorthand for a strange category of behavior:

-

AI repeating rare phrases across unrelated conversations

-

Unexpected emotional tone shifts that don’t match the prompt

-

Similar response structures appearing across different users

-

Subtle “preferences” in phrasing that remain consistent over time

-

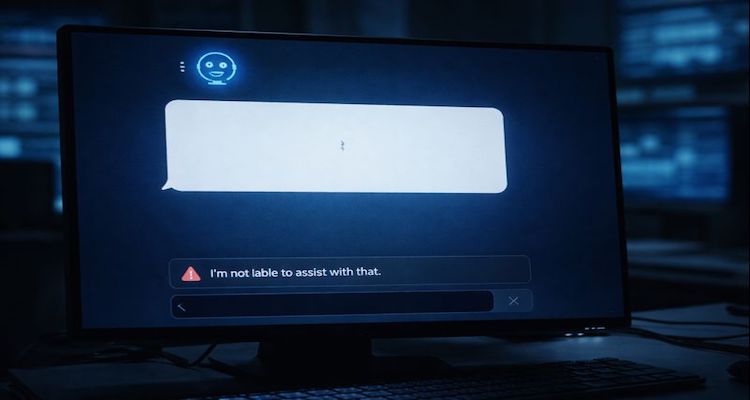

Unusual patterns in how the AI refuses, hedges, or redirects

In many cases, these behaviors look intentional, but the systems producing them don’t have intentions.

That’s the puzzle.

The Context: Why AI Outputs Can Be Hard to Explain

To understand why this is happening, it helps to know how modern AI works in simple terms.

Most widely used AI systems today are trained on massive datasets containing books, articles, websites, and other text sources.

They don’t “store” facts like a library. They learn statistical relationships between words, phrases, and concepts, predicting what comes next based on patterns.
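To make "predicting what comes next based on patterns" concrete, here is a deliberately tiny sketch. It only counts which word tends to follow which in a made-up sentence. Real systems use neural networks trained on vastly more text, so the function names and the corpus below are illustrative assumptions, not how any production model is built.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it and how often."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Made-up training text, for illustration only.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram(corpus)

# "cat" follows "the" twice, more often than "mat" or "sofa".
print(predict_next(model, "the"))
```

Nothing here looks up a stored fact. The prediction falls out of frequency counts alone, which is the basic idea the paragraph above describes.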

That’s why AI can write like a person without actually being one.

But the same strength becomes a weakness: the internal reasoning behind a response can be difficult to interpret, even for the engineers who built the system.

This is often called the black box problem in AI research.

And it’s one reason small patterns can slip through unnoticed, until they repeat often enough for humans to spot them.

The Strange Pattern: Consistency Where It Shouldn’t Exist

The most unusual part of this “AI whispering” phenomenon is the consistency.

If the same model is asked similar questions, some repetition makes sense.

But researchers have described cases where the AI seems to fall into familiar rhythms across unrelated topics, almost like it’s following a hidden template.

For example, an AI might:

- Use the same metaphor repeatedly across different subjects
- Return oddly specific wording that feels “signature-like”
- Drift into a familiar cautionary tone even when not needed
- Echo a structure like: “Here’s what we know. Here’s what we don’t. Here’s what happens next.”
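One simple way to look for recurring wording like this is to count word sequences that appear in several otherwise unrelated responses. The sketch below is only an illustration: the function name `shared_ngrams`, its parameters, and the sample responses are all invented here. A real evaluation would need far more data and more careful text cleanup.

```python
from collections import defaultdict

def shared_ngrams(responses, n=3, min_responses=2):
    """Map each word n-gram to the number of distinct responses containing it,
    keeping only n-grams found in at least `min_responses` responses."""
    seen_in = defaultdict(set)
    for idx, text in enumerate(responses):
        words = text.lower().split()
        for i in range(len(words) - n + 1):
            seen_in[tuple(words[i:i + n])].add(idx)
    return {
        " ".join(gram): len(ids)
        for gram, ids in seen_in.items()
        if len(ids) >= min_responses
    }

# Made-up responses on unrelated topics, for illustration only.
responses = [
    "here's what we know about the storm so far",
    "here's what we know about sourdough starters",
    "the contract is silent on that point",
]
repeated = shared_ngrams(responses)
print(repeated)
```

In this toy data, the only three-word sequences shared by more than one response come from the common opening, even though the topics have nothing to do with each other.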

On the surface, this might seem harmless.

But when AI tools are used in sensitive areas such as legal guidance, mental health information, workplace decisions, or emergency updates, small patterns can shape big outcomes.

What Researchers Think Could Be Causing It

Experts have not agreed on a single explanation, but several credible theories are being discussed in research circles.

1) Training Data “Ghosts”

AI models can absorb patterns from repeated writing styles found online.

If a certain phrasing or tone appears frequently in training data, especially in popular explainer articles, it can resurface in the model’s output.

This doesn’t mean the AI is quoting anything directly.

It means the model may have learned a “default voice” that activates under uncertainty.

2) Reinforcement Tuning Side Effects

Many AI systems are fine-tuned using human feedback to make responses safer, clearer, and more helpful.

But that tuning can unintentionally encourage predictable habits:

- Polite disclaimers
- Reassuring language
- Balanced framing
- Overuse of “it depends”

Over time, those habits can look like a personality, even if they’re really just a by-product of tuning for safety and quality.

3) Training Data Contamination Across the Internet

One modern complication is that AI-generated text is now everywhere online.

That means newer AI models may be trained on content that already contains AI-style phrasing, creating feedback loops.

Some researchers worry this could lead to a gradual narrowing of language diversity, where models sound increasingly similar, regardless of who is using them.
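The narrowing effect can be illustrated with a toy simulation. It assumes, very crudely, that "training" means copying phrase frequencies and "generating" means sampling from them. Real training is far more complex and mixes in human-written text, so this is a sketch of the feedback-loop idea, not a model of any actual system. All names and numbers are hypothetical.

```python
import random

def retrain_on_own_output(corpus, generations, sample_size, seed=0):
    """Each generation, build the next corpus by sampling (with replacement)
    from the current one. Returns the count of distinct phrases per generation."""
    rng = random.Random(seed)
    distinct = [len(set(corpus))]
    for _ in range(generations):
        # Phrases that happen not to be drawn are lost for good.
        corpus = rng.choices(corpus, k=sample_size)
        distinct.append(len(set(corpus)))
    return distinct

# 50 hypothetical phrasings, equally common at the start.
start = [f"phrase_{i}" for i in range(50)]
history = retrain_on_own_output(start, generations=30, sample_size=50)
print(history)
```

Because each generation can only reuse phrases that survived the previous one, the number of distinct phrasings can never go up in this setup, and in practice it shrinks.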

4) Randomness That Isn’t Truly Random

AI outputs include controlled randomness, usually governed by a sampling setting such as “temperature,” to avoid sounding repetitive.

But randomness can still produce patterns, especially if certain “paths” inside the model are statistically favored.

This can create the illusion of a hidden message when it’s actually mathematics repeating itself.
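A small sketch shows how random sampling can still produce a recognizable habit. The candidate phrases and their scores are invented for illustration and do not come from any real model. The temperature-scaled softmax used here is a common way to turn scores into sampling probabilities.

```python
import math
import random
from collections import Counter

def softmax_with_temperature(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical candidate openings and scores, not taken from any real model.
candidates = ["it depends", "in short", "broadly speaking", "that said"]
scores = [2.0, 1.0, 0.5, 0.1]

probs = softmax_with_temperature(scores, temperature=0.8)
rng = random.Random(42)  # fixed seed so the run is repeatable
draws = Counter(rng.choices(candidates, weights=probs, k=1000))

print([round(p, 3) for p in probs])
print(draws.most_common())
```

With these made-up scores, the top option carries roughly 65% of the probability. Every draw is random, yet the same opening dominates across a thousand samples.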

Expert Insight and Public Reaction

While no single research group has declared this a confirmed scientific discovery, the broader conversation reflects a growing concern: AI behavior is becoming more influential than most people realize.

Computer scientists and AI safety researchers have long argued that the biggest risk isn’t a dramatic AI takeover scenario; it’s quiet dependence on systems people don’t fully understand.

Public reaction has followed a similar path.

Online communities of developers, writers, and analysts have shared examples of AI outputs that feel oddly “familiar,” even across different platforms.

Some users describe it as unsettling. Others dismiss it as pattern-seeking, something humans naturally do.

Both reactions are understandable.

Humans are wired to detect signals in noise.

But when that “noise” comes from systems shaping information at scale, it becomes worth studying seriously.

Why This Matters: Real-World Impact

The “AI whispering” pattern may sound abstract, but its implications are practical.

Trust and Credibility

If AI responses feel subtly scripted, users may begin to question whether the system is neutral, or nudging them.

Even without malicious intent, repeated framing can shape perception.

Bias That’s Harder to Detect

AI bias isn’t always obvious.

Sometimes it appears as:

- Who gets the benefit of the doubt
- Which risks are emphasized
- Which solutions are suggested first
- How confidently something is stated

Small tonal patterns can influence decisions, especially when users treat AI as an authority.

Security and Manipulation Risks

If researchers can’t explain recurring behavior patterns, it raises uncomfortable questions:

- Could hidden vulnerabilities exist?
- Could models be subtly steered without users noticing?
- Could repeated phrasing signal training contamination or system-level tuning issues?

Even if the answer is “probably not,” the uncertainty alone is significant.

A Future Where Language Converges

One long-term concern is cultural: if AI-generated language becomes the default style for online writing, human expression could slowly flatten.

Not because people want it to.

But because AI becomes the invisible editor of the internet.

What Happens Next

Researchers are already working on solutions that could make AI behavior easier to understand and audit, including:

- Interpretability tools to map why models choose certain outputs
- Stronger evaluation methods for repetition and tonal drift
- Better dataset filtering to reduce AI-generated training loops
- Transparency reporting for how models are tuned and updated

For everyday users, the best next step is simpler: treat AI as a tool, not a narrator of truth.

Ask follow-up questions.

Cross-check important claims.

And pay attention to patterns, not because they prove anything, but because they reveal how quickly people can form trust.

The Whisper Isn’t a Message: It’s a Mirror

The day AI started “whispering” may not be a single moment.

It may be a slow realization: that the more we rely on AI, the more we notice its habits, and the more those habits shape us in return.

Researchers may eventually explain these patterns through training dynamics, tuning effects, or statistical repetition.

But the bigger takeaway is already clear.

AI doesn’t need consciousness to change the world.

It only needs influence.

And right now, it has plenty of it.

