Earning Trust in the Age of AI: Navigating Truth in a Synthetic World

In a digital world flooded with AI-generated content, building trust is crucial. Discover strategies, expert insights, and ethical practices to restore credibility.

Introduction: The New Age of Doubt

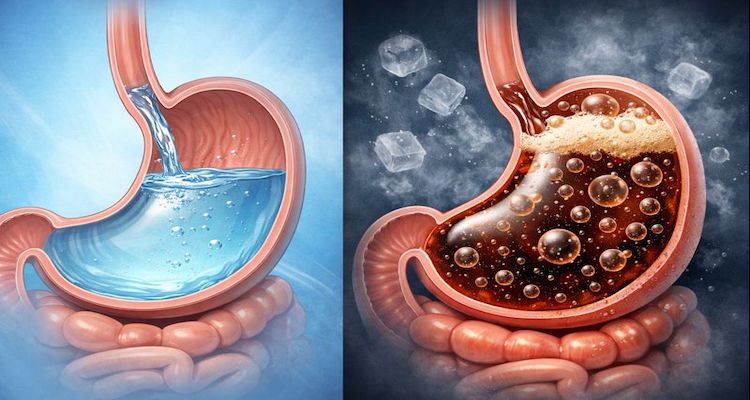

Imagine reading a breaking news article, an emotional open letter, or even a heartwarming story—only to find out it was entirely crafted by artificial intelligence. As machine-generated words flood the internet, a growing question looms: Can we still trust what we read online?

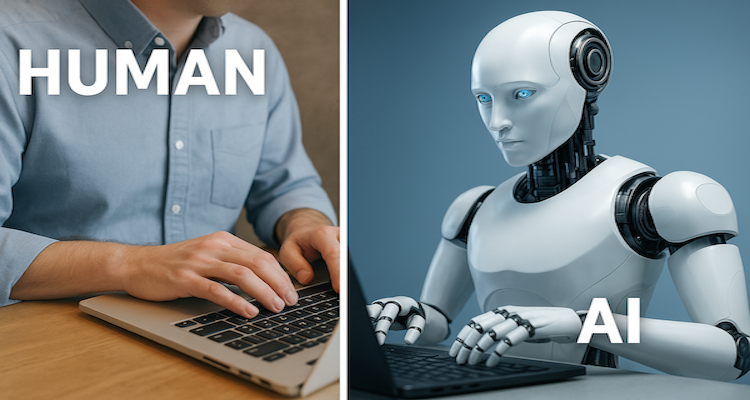

In an era where the output of deepfakes, chatbots, and algorithmic writing tools is becoming indistinguishable from human work, the challenge isn’t just about identifying truth; it’s about rebuilding belief. How can creators, journalists, and platforms foster trust when authenticity itself is being redefined?

Context & Background: The Rise of the Machines—and the Mistrust

Artificial intelligence has revolutionized content creation. Tools like ChatGPT, Gemini, and Claude now generate everything from news reports to poetry, marketing campaigns to scientific abstracts. While their capabilities save time and enhance productivity, they also introduce a chilling ambiguity—what’s real, and what’s not?

Recent studies suggest that more than half of internet users cannot reliably distinguish AI-written content from human-authored content. Worse, disinformation campaigns, often powered by AI, have been used to mislead public opinion, influence elections, and propagate conspiracy theories.

The World Economic Forum ranked “misinformation and disinformation” among the top global risks for 2024. At the heart of this risk lies one common thread: eroding trust.

Main Developments: From Content Flood to Credibility Crisis

The last two years have seen an unprecedented surge in AI-generated media. Brands automate product descriptions. News sites experiment with AI-assisted reporting. Students use AI for homework, influencers for captions, and scammers for phishing emails.

This tidal wave of synthetic content has triggered a credibility crisis. The Reuters Institute’s Digital News Report 2024 noted a marked decline in public trust toward online media, particularly in regions where AI-generated content is prevalent and unregulated.

In response, several global platforms have started tagging AI-generated content, while jurisdictions such as the U.S., the EU, and India are pushing for legislation requiring transparency in digital content creation. However, these steps, while significant, barely scratch the surface of the deeper societal issue: trust is easier to break than to rebuild.

Expert Insight & Public Reaction: Balancing Innovation with Integrity

“AI is not the enemy,” says Claire DeMont, a digital ethics researcher at the Massachusetts Institute of Technology. “The problem is opacity. Readers need to know who—or what—is speaking to them.”

She’s not alone. A Pew Research Center poll in early 2025 found that 68% of respondents favor mandatory labeling of AI-generated content. Meanwhile, journalists and educators are demanding clearer ethical standards for responsible AI use.

Social media has amplified both anxiety and activism. Hashtags like #HumansFirst and #VerifyTheSource trend regularly as users urge platforms to adopt digital watermarks or blockchain-based verification tools.

Some publishers, like The Guardian and The New York Times, have taken a hybrid approach—using AI for data crunching but keeping human writers at the narrative helm, always with a byline indicating authorship. “Transparency builds trust,” says Jordan Malik, head of editorial at a major news outlet. “Readers deserve to know if a human is behind the words.”

Impact & Implications: What’s at Stake?

The implications extend beyond journalism. In sectors like finance, healthcare, law, and academia, the trustworthiness of content can have life-altering consequences. A misdiagnosis from an AI chatbot, a legal brief written without oversight, or a fraudulent investment article could cause real harm.

For brands, reputation is on the line. Companies that fail to disclose their AI use risk backlash, boycotts, and lawsuits. On the other hand, those that adopt transparent policies and highlight ethical AI use are earning customer loyalty.

Education systems, too, are evolving. Universities are rethinking plagiarism policies and integrating AI literacy into curricula. Some now teach students not just how to write but how to read critically in a synthetic world.

Regulatory frameworks are changing as well. The EU’s AI Act, which entered into force in August 2024 with most of its provisions due to apply from August 2026, mandates clear AI disclosures and risk assessments for content-generation tools. Countries like Australia and Canada are drafting similar laws to ensure digital accountability.

Conclusion: Toward a Trustworthy Digital Future

In the race between AI advancement and human discernment, trust is the battleground. But building it isn’t just about more rules—it’s about restoring relationships. Between reader and writer. Brand and consumer. Human and machine.

Transparency, verification, and ethical storytelling are not optional—they are essential pillars of credibility in this new digital era.

As we move forward, one principle must guide us: The future of content isn’t just about who creates it—it’s about who believes in it.

⚠️ (Disclaimer: This article is intended for informational purposes only and reflects current industry trends and public sentiment as of May 2025. It does not constitute legal, ethical, or technological advice. Always consult qualified professionals for decisions involving AI, content verification, or digital trust strategies.)
